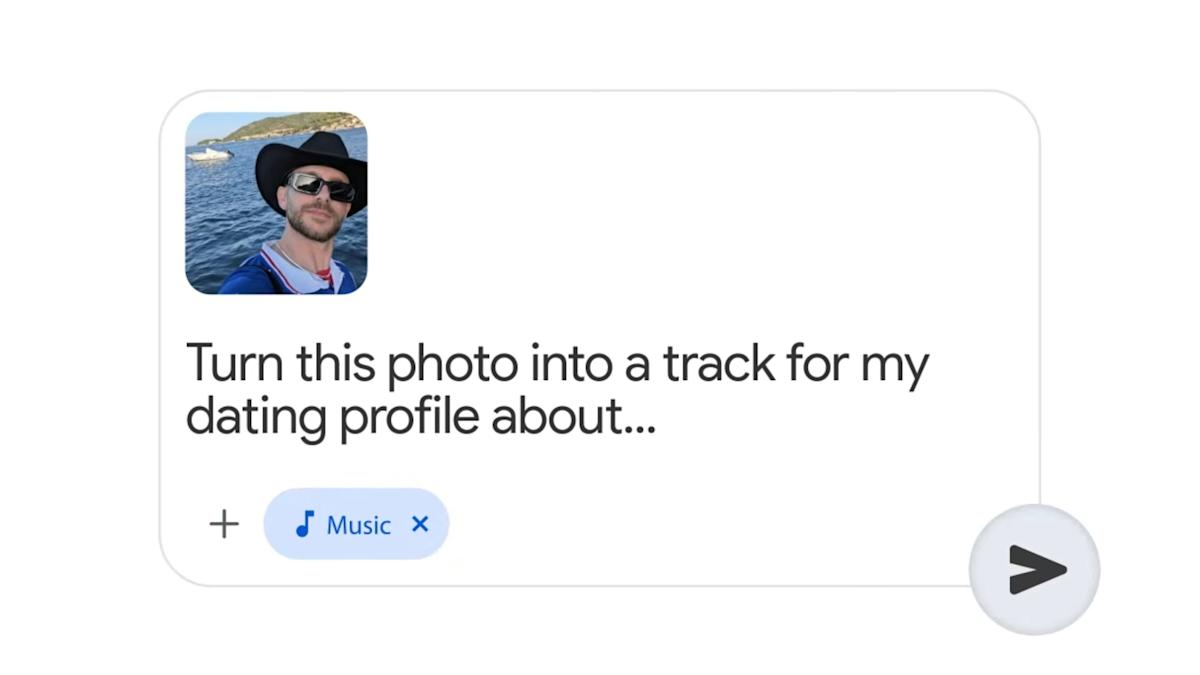

I was hanging out at a local coffee shop just a few days ago, trying to explain a very specific “vibe” to a friend. You know the feeling—where you have a sound in your head that’s so niche it feels almost impossible to track down? I was describing something like “chill lo-fi jazz, but layered with a really chunky 80s synth-pop bassline… specifically for a cat’s first birthday party.” It’s exactly the kind of ridiculous, hyper-specific request that, until about five minutes ago, would have required a music theory degree, a high-end Ableton setup, and way too much free time. But as of this week, those technical barriers have officially crumbled. According to Engadget—the site that basically lives and breathes every new development in consumer electronics—Google has finally baked its Lyria 3 model directly into Gemini. This means anyone can now whip up a 30-second musical sketch just by typing out a simple prompt and hitting “enter.”

It’s a pretty wild moment for anyone who’s been watching the generative AI space explode over the last couple of years. We’ve already gotten used to the text generators, we’ve seen the surreal (and sometimes terrifying) AI images, and we’re even starting to navigate the uncanny valley of AI-generated video. But music? Music feels different. It’s visceral. It’s deeply mathematical yet purely emotional. And while Google’s latest attempt at these “musical approximations” probably isn’t going to have Taylor Swift looking over her shoulder just yet, it marks a massive shift in how we’re going to define “content” versus actual “composition” moving forward. It’s not just about making a sound; it’s about making a mood on demand.

Why the 30-second “Snippet” is the new gold standard for creators

The engine under the hood of this update is Lyria 3, a model that Google claims is significantly more “musically complex” than anything they’ve put out before. For the average person just messing around with the app, this means you can ask for a “comical R&B slow jam about a lonely sock trying to find its match” and actually get something back that sounds like it belongs on a late-night radio station—at least for half a minute. But the real “wow” factor isn’t just the novelty of a machine singing about laundry; it’s the level of control you have. You can get surprisingly granular with it, tweaking the tempo or even specifying the style of the drumming. You’re essentially acting as the creative director for a digital session band that never gets tired and doesn’t ask for a cut of the royalties.

And let’s be honest: that 30-second limit doesn’t look like a technical failure; it looks like a very deliberate, very savvy choice. In our current digital landscape, which is almost entirely dominated by TikTok, Instagram Reels, and YouTube Shorts, 30 seconds isn’t a limitation at all. It’s the standard unit of currency. By integrating this tech directly into YouTube’s “Dream Track” feature, Google is basically giving creators a toolkit to bypass the soul-crushing search for royalty-free background music. Why would you spend three hours digging through a generic library of “happy ukulele” tracks when you can just manifest a bespoke backing track that perfectly fits your video’s specific comedic timing? It’s a game-changer for the workflow of a modern influencer.

If we look at the broader industry, the numbers tell a fascinating story. The IFPI’s 2024 Global Music Report found that the global recorded music market grew by a solid 10.2% in 2023, mostly thanks to paid streaming. However, the industry is now bracing itself for a massive flood of what people are calling “functional music”: tracks designed purely for background noise, focus, or quick social media snippets. Lyria 3 is the high-octane engine for that flood. It’s the democratization of the “vibe,” and honestly, it’s going to make the lives of traditional stock music composers a whole lot more complicated in the coming years.

“The challenge for AI isn’t just making a sound that resembles music; it’s capturing the intentionality that makes a listener feel something. We’re getting closer to the sound, but the soul is still in the prompt, not the processor.”

— Marcus Thorne, Digital Media Analyst

Album art by “Nano Banana” and the weird, fever-dream world of AI lyrics

What I find particularly interesting—and a little bit quirky, in that classic Google sort of way—is the whole ecosystem they’ve built around these tracks. You aren’t just getting a raw audio file; you’re getting a full, polished package. Gemini can now pair these musical clips with custom album art generated by their “Nano Banana” image model. It’s a complete, AI-generated micro-release ready for the digital age, all created in seconds. But as with everything in the generative world, there’s a bit of a catch. While the instrumentals are surprisingly convincing and well-produced, the lyrics often fall headfirst into that “strange and corny” bucket we’ve all come to expect when LLMs try to be poetic or soulful.

I’ve spent some time listening to a few samples, and while the production quality is undeniably high, the actual songwriting often feels like a vivid fever dream. It’s technically correct (the rhymes are there, the meter is fine), but it’s emotionally vacant. It’s the musical version of those AI-generated hands with six fingers: on first listen it sounds totally fine, but the longer you listen, the more you realize something is just… off. But maybe that’s the whole point? For a silly song about a sock, that “corny and strange” vibe might actually be the exact aesthetic you’re aiming for. It adds a layer of irony that works for internet humor.

According to a 2025 Statista survey, nearly 40% of creators under the age of 25 have already used some form of generative AI to help them out in their creative process. For this demographic, that slightly “machine-made” quality isn’t a bug or a flaw; it’s actually a feature. We’re watching the birth of a new genre of digital folk art, where the human’s role has shifted from being the one who plays and sings to being the one who curates and prompts. It’s a different kind of creativity, but it’s creativity nonetheless.

Can you hear the watermark? The tech trying to keep AI music honest

Of course, we can’t really talk about AI-generated music without addressing the “fake” factor and the ethics behind it. We’ve all seen the absolute chaos caused by those AI tracks that mimic famous artists with scary accuracy. Google is clearly trying to get ahead of the inevitable backlash by embedding SynthID watermarks into every single Lyria 3 output. This isn’t just a simple metadata tag that a savvy user can strip away in two seconds; it’s a watermark embedded directly into the audio itself, inaudible to the human ear but readable by detection tools built to look for it.

Last year, back at Google I/O 2025, the company made a big deal out of its SynthID Detector, a tool built to help platforms and everyday users check content for SynthID watermarks across text, images, and audio. It’s a bold move toward transparency, but it also highlights the massive arms race currently happening between generative models and the tools built to detect their output. As these musical models get better and more nuanced, the watermarks are going to have to become incredibly sophisticated to keep up. It’s a strange world we’re living in when we feel like we need “proof of humanity” for the songs we listen to while we’re doing the dishes or driving to work.

Is this the end of the “real” musician as we know it?

Personally, I don’t think we’re there yet. If anything, the rise of tools like Lyria 3 is probably going to drive a massive premium on live performances and “imperfect” human recordings. When literally anyone can generate a perfect 30-second R&B beat in their sleep, the market value of that specific beat basically drops to zero. What remains valuable is the story, the actual person behind the instrument, and that shared, messy experience of a live show where things can go wrong. We are likely entering an era where music is split into two very distinct categories: “Utility Music” (which AI will absolutely dominate) and “Artistic Music” (which will remain a human stronghold for the foreseeable future).

But for the hobbyist—the person who just wants to make a goofy song for their partner’s birthday or a custom soundtrack for their summer vacation vlog—Lyria 3 is a genuine gift. It removes the technical gatekeeping that has kept so many people from ever playing around with sound. It makes the act of making music as accessible as sending a text message. And that, despite the occasionally corny lyrics and the 30-second cap, is something I think is worth turning the volume up for. It’s about play, and play is where innovation starts.

Can I use Lyria 3 to make a full-length song?

Not quite yet. Right now, Gemini is strictly limited to generating 30-second clips. While you have the ability to remix those tracks and get really specific with the style and instrumentation, the tool is currently designed for short-form content and “sketches” rather than full, radio-ready singles. However, if you look at the trajectory Google’s audio models have taken over the last year, it’s a safe bet that longer durations are on the horizon.

Is the music I generate with Gemini copyrighted?

This is a bit of a legal “Wild West” right now. While Google allows you to use these tracks within its own ecosystem (in your YouTube Shorts, for example), the actual copyright status of AI-generated content is still being hotly debated in courts all over the world. You should also remember that all Lyria 3 tracks carry that SynthID watermark, so they can always be identified as AI-generated, which might affect how various platforms treat them.

What languages does Lyria 3 support?

As of February 2026, this feature is available to users 18 and older in English, Spanish, German, French, Hindi, Japanese, Korean, and Portuguese. It’s a pretty wide net for a launch, and Google has already hinted that they’ll be rolling out support for even more languages later this year to make it a truly global tool.

The rollout for Lyria 3 is officially starting today. If you’re 18 or older and you’ve got a Gemini account, you can jump in and start “composing” your own tracks right now. Just a fair warning: don’t be surprised if your silly song about a lonely sock ends up being a strangely catchy earworm that stays stuck in your head way longer than those 30 seconds. That’s the real power of the algorithm: it seems to know exactly what we like, even when we can’t quite explain why we like it.

This article is sourced from various news outlets. Analysis and presentation represent our editorial perspective.